From Chalkboards to AI

Setting Boundaries: Developing AI Policies for Science Classrooms

By Christine Anne Royce, Ed.D., and Valerie Bennett, Ph.D., Ed.D.

Posted on 2024-11-11

Disclaimer: The views expressed in this blog post are those of the author(s) and do not necessarily reflect the official position of the National Science Teaching Association (NSTA).

Artificial Intelligence (AI) is transforming how we live and work, and the education sector is no exception. As AI continues to develop, it has the potential to significantly enhance science classrooms, helping students engage in real-world scientific practices. With AI’s rapid development, schools must establish policies to manage how students and teachers use this powerful tool. Whether helping with research, simplifying complex data analysis, or offering personalized tutoring, AI can enhance learning—but only if we understand and use it responsibly.

The Need for a Policy

First, it is important to determine if your district has an AI policy, then to decide if that policy meets your needs or if there are additional components that would be required for your courses. For science educators, crafting an AI policy isn’t just about managing technology: It’s about using this tool in a meaningful and productive way. AI isn’t a one-size-fits-all solution, and while it offers amazing opportunities, it also presents challenges. Just as you set expectations regarding laboratory safety, you need to establish boundaries and guidelines for students and review them with the students regularly. Without guidelines, students might rely excessively on AI, which undermines their problem-solving skills. Or worse yet, they could use it unethically—for instance, to generate answers for assignments or lab reports with no understanding of the content or connection to supporting evidence.

Here’s where a well-structured AI policy comes in. Having clear expectations ensures that AI is used as a supplement to or support for learning and that the technology will not drive the instruction or be used as a shortcut. Teachers and students need to understand when AI can be beneficial and when it can be detrimental to the learning process.

Furthermore, an AI policy can help teachers and schools address the growing concerns about privacy, data security, and ethical use. A good policy will set the stage for a balanced approach to using the tool, ensuring that AI is a positive force in the classroom without infringing on ethical or privacy standards.

A Range of Uses: Understanding AI as a Tool

To craft an effective policy, we must start by understanding that AI is a tool, not an all-knowing entity. Like a calculator used in math class, AI can simplify tasks, making data analysis or simulations more accessible. But just as calculators don’t replace a student’s need to learn arithmetic, AI doesn’t supersede the critical thinking, creativity, and hands-on experience students need when learning science.

When doing data analysis in a chemistry lab, for example, AI can help students quickly crunch numbers or identify trends in large datasets. This allows students to spend more time interpreting results and less time on tedious calculations. However, before turning to AI, students should understand the foundational concepts behind those calculations. Otherwise, they miss the opportunity to develop the analytical skills they need. Therefore, any policy must treat AI as an aid, and each use of it should be chosen intentionally.

An effective AI policy offers a spectrum of acceptable uses for AI, tailored to various tasks and purposes. This approach allows students to benefit from AI’s capabilities while setting boundaries to preserve the learning process.

Crafting Your Own AI Policy

Every classroom is different, and science classrooms often have their own level of differentiation, so what follows are general suggestions to consider as you develop your policy.

Clarity and Transparency. Ensure the policy clearly outlines what constitutes acceptable and unacceptable use of AI. Be explicit about when students must do tasks manually and when they can use AI. This may require an explanation for each project in the classroom as well as general statements, such as never using AI to generate answers for homework.

For example, when developing content for a town hall meeting in which students discuss ideas related to wind farms, students might be permitted to use AI to help find specific newspaper articles about the pros and cons of wind farms. Students could also brainstorm their own list of pros and cons, then check to see if AI provides additional recommendations. However, once the research is conducted, students must demonstrate that they verified the results produced, then generate their own set of talking points from the research for the town hall meeting. In this case, the policy makes clear that while AI can assist in the research phase, students must do the final creative and presentation work manually.

Ethical Considerations. Address issues like plagiarism and intellectual property infringement. Make it clear that claiming AI’s work as their own is not permitted. Students should be required to cite any AI-generated work they use, even if it’s just an outline or summary, as they would any reference material or statistical tool. The American Psychological Association (APA) has already developed guidelines for citing AI programs.

An example might be the following: After participating in a chemistry lab and analyzing the data, students may be tempted to use AI to generate a summary and conclusion of the results rather than demonstrating their own insights and understanding. In this situation, students would be using AI unethically by misrepresenting the AI work as their own, which would be considered plagiarism.

Privacy and Data Security. Since many AI tools collect data, include guidelines on protecting student privacy. Ensure that students know which tools are approved for use and why.

Considerations. This area relates to the need for teaching media literacy and digital safety. Students need to be taught about personal information protection and engaging in safe behaviors when using digital tools. If you need more information in this area, the National Association for Media Literacy Education (NAMLE) provides recommendations. (NSTA is a founding member of NAMLE’s National Media Literacy Alliance.)

Levels and Stages of Use. Tailor the AI policy to different levels of learning. High school students, for instance, might have more freedom to use AI than middle schoolers do. It is also important to tailor the specific use levels within the instructional and learning process.

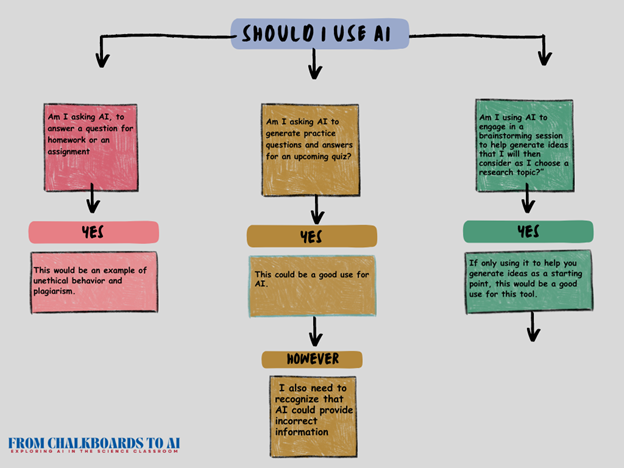

Strategy. While direct guidance is needed at certain points, a spectrum of guidelines might help students in their routine work. A chart that illustrates this spectrum with a series of questions could help students consider their reasons and purposes for using the technology and make informed decisions.

If you are working on a department, school, or district policy, it is also important to consider the following concepts.

- Teacher Support. Provide teachers with resources to understand the AI tools being used so they can effectively monitor their students’ work and offer guidance. Regular workshops or professional development sessions can help teachers stay updated.

- Flexibility. Technology is always evolving, and so should the policy. Schedule regular reviews to update the policy as AI tools improve or as new tools become available.

If you need a starting point, TeachAI, a collaboration of several organizations and nonprofits, offers an editable document that provides some solid beginning points for consideration.

Conclusion: Balancing Opportunity With Responsibility

AI is a powerful tool, and its presence in science classrooms is inevitable. By crafting an AI policy, educators can help students use AI responsibly and ethically, ensuring it enhances their learning rather than hindering it. The goal is not to stifle innovation but to promote critical thinking, problem solving, and ethical use of technology. By treating AI as a supportive or supplemental tool—rather than a substitute for learning—we can prepare students for the challenges and opportunities of the future, while still fostering their growth as curious, engaged learners.

Establishing clear, thoughtful guidelines on when and how to use AI in science classrooms will help students harness its potential while ensuring they stay grounded in the fundamentals of scientific inquiry.

Christine Anne Royce, Ed.D., is a past president of the National Science Teaching Association and currently serves as a Professor in Teacher Education and the Co-Director for the MAT in STEM Education at Shippensburg University. Her areas of interest and research include utilizing digital technologies and tools within the classroom, global education, and the integration of children's literature into the science classroom. She is an author of more than 140 publications, including the Science and Children Teaching Through Trade Books column.

Valerie Bennett, Ph.D., Ed.D., is an Assistant Professor in STEM Education at Clark Atlanta University, where she also serves as the Program Director for Graduate Teacher Education and the Director for Educational Technology and Innovation. With more than 25 years of experience and degrees in engineering from Vanderbilt University and Georgia Tech, she focuses on STEM equity for underserved groups. Her research includes AI interventions in STEM education, and she currently co-leads the Noyce NSF grant, works with the AUC Data Science Initiative, and collaborates with Google to address CS workforce diversity and engagement in the Atlanta University Center K–12 community.

Note: This article is part of the new blog series From Chalkboards to AI, which focuses on how artificial intelligence can be utilized within the classroom in support of science as explained and described in A Framework for K–12 Science Education and the Next Generation Science Standards.
